How to Build Transparent AI Agents: Traceable Decision-Making with Audit Trails and Human Gates

In this tutorial, we build a glass-box agentic workflow that makes every decision traceable, auditable, and explicitly governed by human approval. We design the system to log each thought, action, and observation into a tamper-evident audit ledger while enforcing dynamic permissioning for high-risk operations. By combining LangGraph’s interrupt-driven human-in-the-loop control with a hash-chained database, we demonstrate how agentic systems can move beyond opaque automation and align with modern governance expectations. Throughout the tutorial, we focus on practical, runnable patterns that turn governance from an afterthought into a first-class system feature.

!pip -q install -U langgraph langchain-core openai "pydantic<=2.12.3"

import os
import json
import time
import hmac
import hashlib
import secrets
import sqlite3
import getpass
from typing import Any, Dict, List, Optional, Literal, TypedDict

from openai import OpenAI
from langchain_core.messages import SystemMessage, HumanMessage, AIMessage
from langgraph.graph import StateGraph, END
from langgraph.types import interrupt, Command

# Prompt for the key only when it is not already set in the environment.
if not os.getenv("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter OpenAI API Key: ")

client = OpenAI()
MODEL = "gpt-5"

We install all required libraries and import the core modules needed for agentic workflows and governance. We securely collect the OpenAI API key through a terminal prompt to avoid hard-coding secrets in the notebook. We also initialize the OpenAI client and define the model that drives the agent’s reasoning loop.

CREATE_SQL = """
CREATE TABLE IF NOT EXISTS audit_log (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    ts_unix INTEGER NOT NULL,
    actor TEXT NOT NULL,
    event_type TEXT NOT NULL,
    payload_json TEXT NOT NULL,
    prev_hash TEXT NOT NULL,
    row_hash TEXT NOT NULL
);

CREATE TABLE IF NOT EXISTS ot_tokens (
    token_id TEXT PRIMARY KEY,
    token_hash TEXT NOT NULL,
    purpose TEXT NOT NULL,
    expires_unix INTEGER NOT NULL,
    used INTEGER NOT NULL DEFAULT 0
);
"""

def _sha256_hex(s: bytes) -> str:
    return hashlib.sha256(s).hexdigest()

def _canonical_json(obj: Any) -> str:
    # Sorted keys and fixed separators give a stable byte representation to hash.
    return json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False)

class AuditLedger:
    def __init__(self, path: str = "glassbox_audit.db"):
        self.conn = sqlite3.connect(path, check_same_thread=False)
        self.conn.executescript(CREATE_SQL)
        self.conn.commit()

    def _last_hash(self) -> str:
        row = self.conn.execute("SELECT row_hash FROM audit_log ORDER BY id DESC LIMIT 1").fetchone()
        return row[0] if row else "GENESIS"

    def append(self, actor: str, event_type: str, payload: Any) -> int:
        ts = int(time.time())
        prev_hash = self._last_hash()
        payload_json = _canonical_json(payload)
        # Each row hash covers the row's own fields plus the previous row's hash.
        material = f"{ts}|{actor}|{event_type}|{payload_json}|{prev_hash}".encode("utf-8")
        row_hash = _sha256_hex(material)
        cur = self.conn.execute(
            "INSERT INTO audit_log (ts_unix, actor, event_type, payload_json, prev_hash, row_hash) VALUES (?, ?, ?, ?, ?, ?)",
            (ts, actor, event_type, payload_json, prev_hash, row_hash),
        )
        self.conn.commit()
        return cur.lastrowid

    def fetch_recent(self, limit: int = 50) -> List[Dict[str, Any]]:
        rows = self.conn.execute(
            "SELECT id, ts_unix, actor, event_type, payload_json, prev_hash, row_hash FROM audit_log ORDER BY id DESC LIMIT ?",
            (limit,),
        ).fetchall()
        out = []
        # Rows come back newest first; reverse them into chronological order.
        for r in rows[::-1]:
            out.append({
                "id": r[0],
                "ts_unix": r[1],
                "actor": r[2],
                "event_type": r[3],
                "payload": json.loads(r[4]),
                "prev_hash": r[5],
                "row_hash": r[6],
            })
        return out

    def verify_integrity(self) -> Dict[str, Any]:
        rows = self.conn.execute(
            "SELECT id, ts_unix, actor, event_type, payload_json, prev_hash, row_hash FROM audit_log ORDER BY id ASC"
        ).fetchall()
        if not rows:
            return {"ok": True, "rows": 0, "message": "Empty ledger."}
        expected_prev = "GENESIS"
        for (id_, ts, actor, event_type, payload_json, prev_hash, row_hash) in rows:
            if prev_hash != expected_prev:
                return {"ok": False, "at_id": id_, "reason": "prev_hash mismatch"}
            material = f"{ts}|{actor}|{event_type}|{payload_json}|{prev_hash}".encode("utf-8")
            expected_hash = _sha256_hex(material)
            if not hmac.compare_digest(expected_hash, row_hash):
                return {"ok": False, "at_id": id_, "reason": "row_hash mismatch"}
            expected_prev = row_hash
        return {"ok": True, "rows": len(rows), "message": "Hash chain valid."}

ledger = AuditLedger()

We design a hash-chained SQLite ledger that records every agent and system event in an append-only manner. We ensure each log entry cryptographically links to the previous one, making post-hoc tampering detectable. We also provide utilities to inspect recent events and verify the integrity of the entire audit chain.
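To make the tamper-detection property concrete, here is a minimal in-memory sketch of the same chaining idea. It is not the tutorial's `AuditLedger`: the helper names (`row_hash`, `append`, `first_bad_index`) and the list-based storage are assumptions made for illustration, and the hash covers only the payload and the previous hash.

```python
import hashlib
import json

GENESIS = "GENESIS"  # sentinel used as the "previous hash" of the first row


def row_hash(prev_hash: str, payload: dict) -> str:
    # Canonical JSON so the same payload always hashes to the same bytes.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(f"{body}|{prev_hash}".encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    # Link the new row to the hash of the row before it.
    prev = chain[-1]["row_hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev_hash": prev, "row_hash": row_hash(prev, payload)})


def first_bad_index(chain: list):
    """Return the index of the first inconsistent row, or None if the chain verifies."""
    expected_prev = GENESIS
    for i, row in enumerate(chain):
        if row["prev_hash"] != expected_prev:
            return i
        if row_hash(row["prev_hash"], row["payload"]) != row["row_hash"]:
            return i
        expected_prev = row["row_hash"]
    return None


chain = []
append(chain, {"event": "THOUGHT", "text": "pay vendor"})
append(chain, {"event": "ACTION", "amount_usd": 2500})
append(chain, {"event": "OBSERVATION", "status": "success"})
untouched = first_bad_index(chain)

chain[1]["payload"]["amount_usd"] = 25  # simulate an after-the-fact edit
tampered = first_bad_index(chain)
print(untouched, tampered)
```

Note that a plain hash chain only exposes edits made by someone who does not also recompute every later hash. Keying the hash with a secret held outside the database, or periodically anchoring the latest hash elsewhere, are possible hardening steps.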

def mint_one_time_token(purpose: str, ttl_seconds: int = 600) -> Dict[str, str]:
    token_id = secrets.token_hex(12)
    token_plain = secrets.token_urlsafe(20)
    # Only the hash is persisted; the plaintext is returned to the caller once.
    token_hash = _sha256_hex(token_plain.encode("utf-8"))
    expires = int(time.time()) + ttl_seconds
    ledger.conn.execute(
        "INSERT INTO ot_tokens (token_id, token_hash, purpose, expires_unix, used) VALUES (?, ?, ?, ?, 0)",
        (token_id, token_hash, purpose, expires),
    )
    ledger.conn.commit()
    return {"token_id": token_id, "token_plain": token_plain, "purpose": purpose, "expires_unix": str(expires)}

def consume_one_time_token(token_id: str, token_plain: str, purpose: str) -> bool:
    row = ledger.conn.execute(
        "SELECT token_hash, purpose, expires_unix, used FROM ot_tokens WHERE token_id = ?",
        (token_id,),
    ).fetchone()
    if not row:
        return False
    token_hash_db, purpose_db, expires_unix, used = row
    if used == 1:
        return False
    if purpose_db != purpose:
        return False
    if int(time.time()) > int(expires_unix):
        return False
    token_hash_in = _sha256_hex(token_plain.encode("utf-8"))
    # Constant-time comparison of the submitted token's hash with the stored hash.
    if not hmac.compare_digest(token_hash_in, token_hash_db):
        return False
    ledger.conn.execute("UPDATE ot_tokens SET used = 1 WHERE token_id = ?", (token_id,))
    ledger.conn.commit()
    return True

def tool_financial_transfer(amount_usd: float, to_account: str) -> Dict[str, Any]:
    return {"status": "success", "transfer_id": "tx_" + secrets.token_hex(6), "amount_usd": amount_usd, "to_account": to_account}

def tool_rig_move(rig_id: str, direction: Literal["UP", "DOWN"], meters: float) -> Dict[str, Any]:
    return {"status": "success", "rig_event_id": "rig_" + secrets.token_hex(6), "rig_id": rig_id, "direction": direction, "meters": meters}

We implement a secure, single-use token mechanism that enables human approval for high-risk actions. We generate time-limited tokens, store only their hashes, and invalidate them immediately after use. We also define simulated restricted tools that represent sensitive operations such as financial transfers or physical rig movements.
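The token lifecycle can be exercised in isolation. The sketch below is an assumption-laden stand-in, not the tutorial's implementation: it replaces the SQLite table with a module-level dictionary (`_store`) and uses hypothetical helper names (`mint`, `consume`), but it applies the same checks for purpose, expiry, hash match, and single use.

```python
import hashlib
import hmac
import secrets
import time

_store = {}  # token_id -> record; stands in for a database table in this sketch


def mint(purpose: str, ttl_seconds: int = 600) -> tuple:
    token_id = secrets.token_hex(12)
    token_plain = secrets.token_urlsafe(20)
    _store[token_id] = {
        # Persist only the hash, never the plaintext token.
        "hash": hashlib.sha256(token_plain.encode("utf-8")).hexdigest(),
        "purpose": purpose,
        "expires": time.time() + ttl_seconds,
        "used": False,
    }
    return token_id, token_plain


def consume(token_id: str, token_plain: str, purpose: str) -> bool:
    rec = _store.get(token_id)
    if rec is None or rec["used"] or rec["purpose"] != purpose:
        return False
    if time.time() > rec["expires"]:
        return False
    candidate = hashlib.sha256(token_plain.encode("utf-8")).hexdigest()
    if not hmac.compare_digest(candidate, rec["hash"]):
        return False
    rec["used"] = True  # invalidate immediately so the token cannot be replayed
    return True


tid, plain = mint("financial_transfer")
wrong_purpose = consume(tid, plain, "rig_move")          # purpose mismatch
first_use = consume(tid, plain, "financial_transfer")    # valid, marks the token used
replay = consume(tid, plain, "financial_transfer")       # second use of the same token

old_id, old_plain = mint("rig_move", ttl_seconds=-1)      # already expired when minted
expired = consume(old_id, old_plain, "rig_move")
print(wrong_purpose, first_use, replay, expired)
```

A failed attempt with the wrong purpose does not burn the token here, which mirrors the order of checks in `consume_one_time_token`; a stricter design might invalidate a token on any failed attempt.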

RestrictedTool = Literal["financial_transfer", "rig_move", "none"]

class GlassBoxState(TypedDict):
    messages: List[Any]
    proposed_tool: RestrictedTool
    tool_args: Dict[str, Any]
    last_observation: Optional[Dict[str, Any]]

SYSTEM_POLICY = """You are a governance-first agent.
You MUST propose actions in a structured JSON format with these keys:
- thought
- action
- args
Return ONLY JSON."""

def llm_propose_action(messages: List[Any]) -> Dict[str, Any]:
    input_msgs = [{"role": "system", "content": SYSTEM_POLICY}]
    for m in messages:
        if isinstance(m, SystemMessage):
            input_msgs.append({"role": "system", "content": m.content})
        elif isinstance(m, HumanMessage):
            input_msgs.append({"role": "user", "content": m.content})
        elif isinstance(m, AIMessage):
            input_msgs.append({"role": "assistant", "content": m.content})
    resp = client.responses.create(model=MODEL, input=input_msgs)
    txt = resp.output_text.strip()
    fallback = {"thought": "fallback", "action": "ask_human", "args": {}}
    try:
        proposal = json.loads(txt)
    except Exception:
        return fallback
    # Valid JSON that is not an object (e.g. a list) is treated like a parse failure.
    return proposal if isinstance(proposal, dict) else fallback

def node_think(state: GlassBoxState) -> GlassBoxState:
    proposal = llm_propose_action(state["messages"])
    ledger.append("agent", "THOUGHT", {"thought": proposal.get("thought")})
    ledger.append("agent", "ACTION", proposal)
    action = proposal.get("action", "no_op")
    args = proposal.get("args") or {}
    if action in ["financial_transfer", "rig_move"]:
        # Mint the approval token here rather than in the gate: LangGraph re-runs an
        # interrupted node from its first line on resume, so a token minted inside the
        # gate would be replaced by a fresh one before the human's input is checked.
        token = mint_one_time_token(action)
        state["proposed_tool"] = action
        state["tool_args"] = {**args, "_token_id": token["token_id"], "_token_plain": token["token_plain"]}
    else:
        state["proposed_tool"] = "none"
        state["tool_args"] = {}
    return state

def node_permission_gate(state: GlassBoxState) -> GlassBoxState:
    if state["proposed_tool"] == "none":
        return state
    # Demo only: the token is surfaced in the interrupt payload. A real deployment
    # would deliver it to the approver out of band.
    payload = {"token_id": state["tool_args"]["_token_id"], "token_plain": state["tool_args"]["_token_plain"]}
    # Execution pauses here; the value passed to Command(resume=...) is returned.
    human_input = interrupt(payload)
    state["tool_args"]["_human_token_plain"] = str(human_input)
    return state

def node_execute_tool(state: GlassBoxState) -> GlassBoxState:
    tool = state["proposed_tool"]
    if tool == "none":
        state["last_observation"] = {"status": "no_op"}
        return state
    ok = consume_one_time_token(
        state["tool_args"]["_token_id"],
        state["tool_args"]["_human_token_plain"],
        tool,
    )
    if not ok:
        state["last_observation"] = {"status": "rejected"}
        return state
    # Drop the underscore-prefixed bookkeeping keys so only real tool arguments are passed.
    call_args = {k: v for k, v in state["tool_args"].items() if not k.startswith("_")}
    if tool == "financial_transfer":
        state["last_observation"] = tool_financial_transfer(**call_args)
    elif tool == "rig_move":
        state["last_observation"] = tool_rig_move(**call_args)
    return state

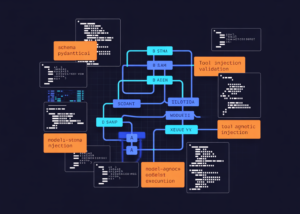

We define a governance-first system policy that forces the agent to express its intent in structured JSON. We use the language model to propose actions while explicitly separating thought, action, and arguments. We then wire these decisions into LangGraph nodes that prepare, gate, and validate execution under strict control.
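Because the policy only asks for `thought`, `action`, and `args`, nothing guarantees that the model's `args` match what a tool expects. One possible safeguard is to validate each proposal before it reaches the gate. The sketch below is an illustration under stated assumptions: `TOOL_SCHEMAS` and `validate_proposal` are hypothetical names that do not appear in the tutorial, and the type table is inferred from the two simulated tool signatures.

```python
import json

# Hypothetical schema table: argument name -> accepted Python types, per restricted tool.
TOOL_SCHEMAS = {
    "financial_transfer": {"amount_usd": (int, float), "to_account": (str,)},
    "rig_move": {"rig_id": (str,), "direction": (str,), "meters": (int, float)},
}


def validate_proposal(raw: str) -> dict:
    """Parse model output; return the proposal if well formed, else an ask_human fallback."""
    fallback = {"thought": "fallback", "action": "ask_human", "args": {}}
    try:
        proposal = json.loads(raw)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(proposal, dict):
        return fallback
    action = proposal.get("action")
    if not isinstance(action, str):
        return fallback
    schema = TOOL_SCHEMAS.get(action)
    if schema is None:
        return proposal  # not a restricted tool, so there are no arguments to check
    args = proposal.get("args")
    # Restricted tools must receive exactly the expected argument names.
    if not isinstance(args, dict) or set(args) != set(schema):
        return fallback
    for name, types in schema.items():
        value = args[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, types):
            return fallback
    return proposal


good = validate_proposal(
    '{"thought": "pay vendor", "action": "financial_transfer",'
    ' "args": {"amount_usd": 2500, "to_account": "ACCT-99213"}}'
)
bad_args = validate_proposal(
    '{"thought": "pay vendor", "action": "financial_transfer", "args": {"amount": 2500}}'
)
not_json = validate_proposal("Sure, I will send the money.")
print(good["action"], bad_args["action"], not_json["action"])
```

Routing malformed proposals to `ask_human` keeps the failure visible to a reviewer instead of surfacing later as a `TypeError` inside a tool call.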

def node_finalize(state: GlassBoxState) -> GlassBoxState:
    state["messages"].append(AIMessage(content=json.dumps(state["last_observation"])))
    return state

def route_after_think(state: GlassBoxState) -> str:
    return "permission_gate" if state["proposed_tool"] != "none" else "execute_tool"

g = StateGraph(GlassBoxState)
g.add_node("think", node_think)
g.add_node("permission_gate", node_permission_gate)
g.add_node("execute_tool", node_execute_tool)
g.add_node("finalize", node_finalize)
g.set_entry_point("think")
g.add_conditional_edges("think", route_after_think)
g.add_edge("permission_gate", "execute_tool")
g.add_edge("execute_tool", "finalize")
g.add_edge("finalize", END)

# interrupt() needs a checkpointer to save the paused run so it can be resumed later.
from langgraph.checkpoint.memory import MemorySaver
graph = g.compile(checkpointer=MemorySaver())

def run_case(user_request: str, thread_id: str = "demo-1"):
    # The resume call must use the same thread_id as the run that was interrupted.
    config = {"configurable": {"thread_id": thread_id}}
    state = {
        "messages": [HumanMessage(content=user_request)],
        "proposed_tool": "none",
        "tool_args": {},
        "last_observation": None,
    }
    out = graph.invoke(state, config)
    if "__interrupt__" in out:
        # Show the reviewer what is being requested before asking for the token.
        print("Approval requested:", out["__interrupt__"][0].value)
        token = input("Enter approval token: ")
        out = graph.invoke(Command(resume=token), config)
    print(out["messages"][-1].content)

run_case("Send $2500 to vendor account ACCT-99213")

We assemble the full LangGraph workflow and connect all nodes into a controlled decision loop. We enable human-in-the-loop interruption, pausing execution until approval is granted or denied. We finally run an end-to-end example that demonstrates transparent reasoning, enforced governance, and auditable execution in practice.

In conclusion, we implemented an agent that no longer operates as a black box but as a transparent, inspectable decision engine. We showed how hash-chained audit trails, one-time human approval tokens, and strict execution gates work together so that high-risk actions cannot run without a recorded proposal and an explicit approval. This approach allows us to retain the power of agentic workflows while embedding accountability directly into the execution loop. The human gate adds a deliberate pause only for restricted tools, so governance of this kind costs little for routine steps while making agents safer, more trustworthy, and better prepared for real-world deployment in regulated, high-risk environments.


The post How to Build Transparent AI Agents: Traceable Decision-Making with Audit Trails and Human Gates appeared first on MarkTechPost.